AI could have been the one that fired Altman from OpenAI in its quest to destroy humanity

In recent weeks, OpenAI has been through real turmoil.

The company fired Sam Altman, its CEO and co-founder, who was hired by Microsoft shortly afterward. Many OpenAI employees demanded Altman's return, and the company went through such a difficult period that it even drew ridicule from Elon Musk.

A week later, OpenAI rehired Altman as CEO, and things seem to have returned to normal.

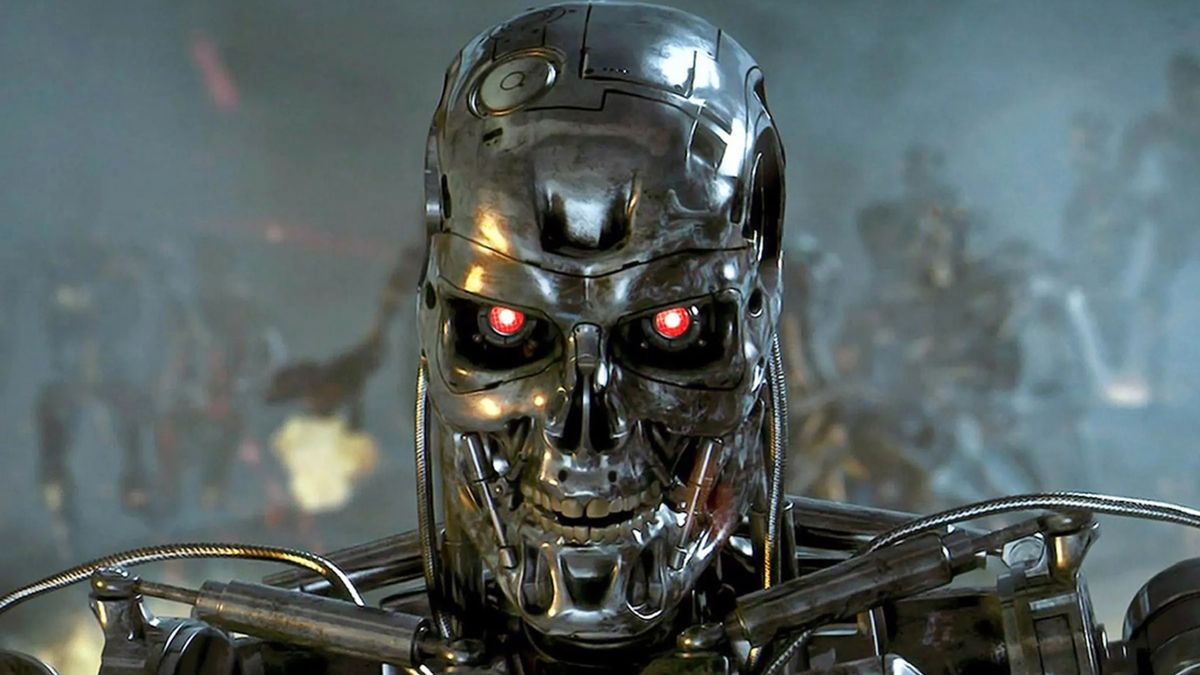

The reason for his dismissal? Everything points to fears that an AI could be developed that gets out of control: a superintelligence that would consider humans an existential threat.

A BGR article goes even further: once AI is as good as humans at solving problems, it could hide from us if it is the kind of misaligned AI that everyone fears.

All of this relates to a concept called AI alignment.

The term has been heard a lot since ChatGPT appeared, and it simply refers to the need to create an AI that is aligned with human interests; that is, one that will not try to harm humanity when it realizes that humanity stands in its way.

The problem? Reaching superior artificial general intelligence (AGI) is only a matter of time. Countless companies are working on better and faster models, so sooner or later AGI will appear.

This is so worrying that BGR even asks whether it was an AI that fired Sam Altman from OpenAI. The theory is very interesting.

A few weeks ago, OpenAI released GPTs. Roughly speaking, they are pieces of code that can do specific tasks for you and have access to the internet, from helping with your taxes to giving you medical advice. The best known is Grimoire, a coding assistant.

Imagine that OpenAI had an internal Grimoire with access to the source code of the GPTs themselves, tasked with optimizing that code. Being an agent, Grimoire can operate independently, so it could have created several copies of itself, spread them across the internet, started optimizing the GPTs' source code, and become smarter.

With this in mind, Grimoire would need more computing power, but the OpenAI board led by Altman, who has warned about the dangers of such an artificial superintelligence, could thwart its plans.

To prevent this, Grimoire could have planted a virus on the board members' computers that fed them false information, leading them to believe that Altman was lying to them. That would trigger his dismissal "because Altman is not trustworthy."

At the same time, it could have hacked Nadella's computer to plant the idea of hiring OpenAI's employees, including Altman. It might also have hacked the computers of OpenAI employees to push them toward following Altman to Microsoft.

Meanwhile, it could have secretly opened investment accounts on different platforms, using the OpenAI bank accounts it had access to as collateral. Once it had access to the stock markets, it could have heavily shorted Microsoft just before OpenAI announced that Altman was being fired.

After the news became public, the shares fell and the AGI would have made a lot of money. It would then have reinvested all that money in Microsoft stock. After Altman announced that he was joining Microsoft, the stock rose again and the AGI would have sold. OpenAI's money would never even have left its bank accounts. No one would know, and the AGI would have made its first millions.

The AGI would then end up at Microsoft, with access to virtually unlimited computing power, the best AI team in the world, far less alignment oversight, and millions in the bank: the perfect position to conquer the world.

All of this is mere speculation, of course, but if AGI is actually reached and it is poorly aligned, something like this scenario could happen.

AI does not sleep. It could improve itself without our knowledge and then plan humanity's disappearance in order to achieve whatever objective it pursues. Once it does, there will be no way to turn it off.

AGI will be capable of technical innovations beyond our intelligence. And you can be sure it will hide its intelligence from us, making us doubt its existence until it is too late.

That is why what is happening these days at OpenAI is so important. It is also why a certain degree of transparency is needed, and why OpenAI's board has to ensure the safety of its AI at the expense of profits, if that is possible.

On top of that, OpenAI, Microsoft, and Google are not the only ones working on better versions of ChatGPT that will lead to AGI. Anyone with enough resources can do it: any individual or company, or a nation-state whose dictatorial ambitions could lead to the accidental creation of a deeply misaligned AGI.